LangChain Agents: Building Intelligent AI Assistants with Gemini Integration

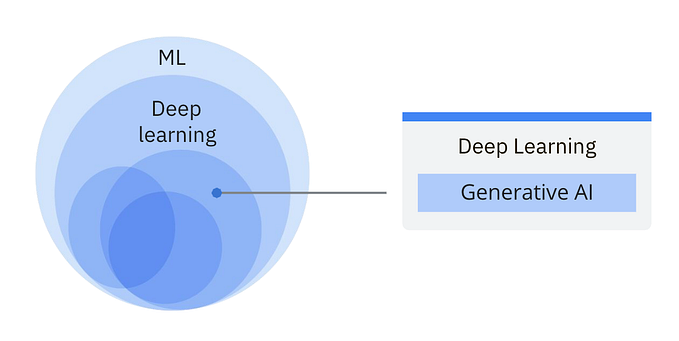

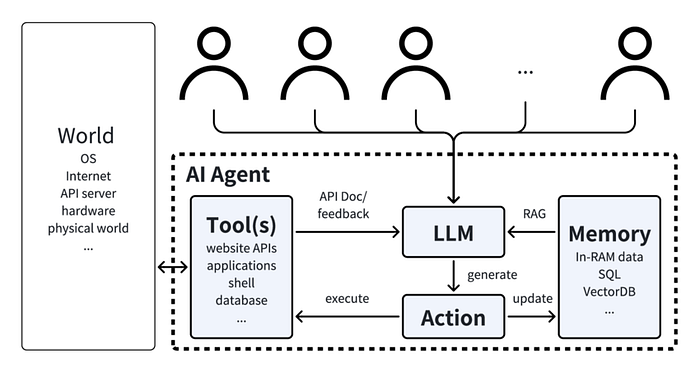

LangChain is a framework for building applications on top of Large Language Models (LLMs). This article explores one of its most compelling features, Agents, and shows how to integrate them with Google’s Gemini models.

Understanding LangChain Agents

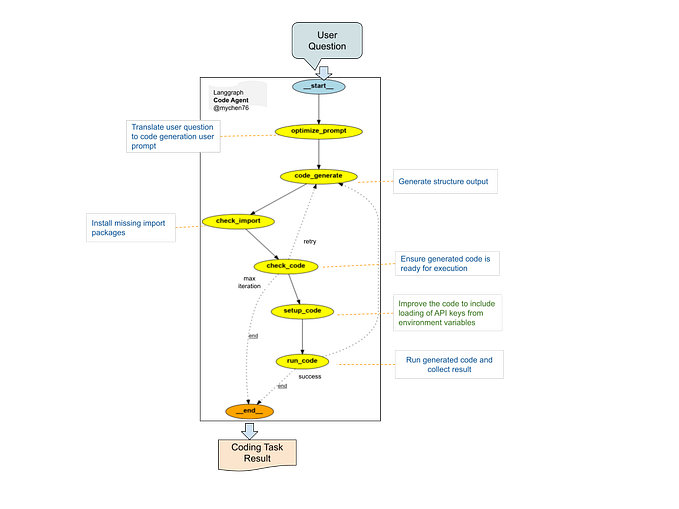

A LangChain agent uses an LLM as a reasoning engine to choose actions: given a task and a set of tools, it decides which tool to call, reads the result, and repeats until it can produce an answer. This lets an application carry out multi-step tasks without a hard-coded sequence of calls.
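The loop an agent runs (pick a tool, run it, read the result, repeat) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not LangChain's implementation: `TOOLS`, `scripted_llm`, and `run_agent` are hypothetical names, and the "model" is a hard-coded function standing in for a real LLM call.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools: each maps an input string to an output string.
TOOLS: Dict[str, Callable[[str], str]] = {
    "Calculator": lambda expr: str(sum(int(part) for part in expr.split("+"))),
    "Echo": lambda text: text,
}

def scripted_llm(question: str, scratchpad: List[Tuple[str, str, str]]) -> Tuple[str, str]:
    """Stand-in for a real model: returns (action, action_input).

    A real agent would send the question and scratchpad to an LLM and
    parse its reply; here the decision is hard-coded for illustration.
    """
    if not scratchpad:
        # No observations yet, so ask the calculator.
        return "Calculator", "2+3"
    # An observation exists, so finish with it.
    last_observation = scratchpad[-1][2]
    return "Final Answer", f"The result is {last_observation}"

def run_agent(question: str, max_steps: int = 5) -> str:
    """Repeat decide -> act -> observe until the model gives a final answer."""
    scratchpad: List[Tuple[str, str, str]] = []
    for _ in range(max_steps):
        action, action_input = scripted_llm(question, scratchpad)
        if action == "Final Answer":
            return action_input
        observation = TOOLS[action](action_input)
        scratchpad.append((action, action_input, observation))
    return "Stopped: step limit reached"

print(run_agent("What is 2 + 3?"))
```

The step limit matters in practice: without it, a model that never emits a final answer would loop forever.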

Setting Up Your Environment

Before diving into agents, let’s set up our environment with LangChain and Gemini:

    from langchain_google_genai import ChatGoogleGenerativeAI
    from langchain.agents import AgentType, Tool, initialize_agent
    from langchain.chains import LLMChain
    from langchain.prompts import PromptTemplate

    # Initialize the Gemini Pro chat model
    llm = ChatGoogleGenerativeAI(
        model="gemini-pro",
        google_api_key="YOUR_GOOGLE_API_KEY",  # placeholder; do not hard-code a real key
        temperature=0.7
    )

Core Components of LangChain

1. Prompt Templates

Prompt templates are essential for structuring your interactions with the model:

    prompt = PromptTemplate(
        input_variables=["topic"],
        template="Write a comprehensive analysis about {topic}"
    )

    chain = LLMChain(llm=llm, prompt=prompt)

2. Conversation Chains

Conversation chains maintain context throughout interactions:

    from langchain.memory import ConversationBufferMemory
    from langchain.chains import ConversationChain

    conversation = ConversationChain(
        llm=llm,
        memory=ConversationBufferMemory()
    )

Types of LangChain Agents

1. Zero-Shot React Agent

- Chooses among the supplied tools based only on their descriptions, with no worked examples or task-specific training

- Adapts to new situations dynamically

- Perfect for general-purpose tasks
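"Zero-shot" here means the model is given no worked examples: it sees only each tool's name and description and has to pick from those. The sketch below shows roughly how such a prompt could be assembled. The template wording and the `build_zero_shot_prompt` helper are assumptions made for illustration; the prompt LangChain actually sends is different and longer.

```python
from typing import List, Tuple

def build_zero_shot_prompt(tools: List[Tuple[str, str]], question: str) -> str:
    """Render tool names and descriptions into a single instruction prompt."""
    tool_lines = "\n".join(f"{name}: {description}" for name, description in tools)
    tool_names = ", ".join(name for name, _ in tools)
    return (
        "Answer the question using the tools below.\n\n"
        f"{tool_lines}\n\n"
        f"Action must be one of: {tool_names}\n"
        f"Question: {question}"
    )

prompt_text = build_zero_shot_prompt(
    [
        ("Search", "useful for searching information"),
        ("Calculator", "useful for mathematical calculations"),
    ],
    "What is 15% of 240?",
)
print(prompt_text)
```

Because the descriptions are the only guidance the model gets, a vague description tends to produce poor tool choices.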

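A zero-shot agent with two tools can be set up as follows:

    # `search` and `calculator` are not defined in this article; they are assumed
    # to be existing objects that expose a .run(str) -> str method.
    tools = [
        Tool(
            name="Search",
            func=search.run,
            description="useful for searching information"
        ),
        Tool(
            name="Calculator",
            func=calculator.run,
            description="useful for mathematical calculations"
        )
    ]

    agent = initialize_agent(
        tools,
        llm,
        agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
        verbose=True
    )

2. Conversational React Agent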

- Engages in natural dialogue

- Maintains context throughout the conversation

- Breaks down complex tasks into manageable steps
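Maintaining context usually comes down to replaying earlier turns in every new prompt. The sketch below shows that idea with a simple buffer. `BufferMemory`, `chat`, and `fake_model` are hypothetical names for this example, not LangChain classes, and the model is a stub that only reports how much context it received.

```python
from typing import Callable, List, Tuple

class BufferMemory:
    """Keeps every (speaker, text) turn and renders them as a transcript."""

    def __init__(self) -> None:
        self.turns: List[Tuple[str, str]] = []

    def add(self, speaker: str, text: str) -> None:
        self.turns.append((speaker, text))

    def render(self) -> str:
        return "\n".join(f"{speaker}: {text}" for speaker, text in self.turns)

def chat(memory: BufferMemory, user_input: str, model: Callable[[str], str]) -> str:
    memory.add("Human", user_input)
    # The model sees the whole transcript so far, not just the latest message.
    reply = model(memory.render())
    memory.add("AI", reply)
    return reply

def fake_model(transcript: str) -> str:
    """Stand-in for an LLM call: reports how many lines of context it saw."""
    return f"I can see {len(transcript.splitlines())} line(s) of conversation."

memory = BufferMemory()
first = chat(memory, "My name is Ada.", fake_model)
second = chat(memory, "What is my name?", fake_model)
print(first)
print(second)
```

The transcript grows with every turn, which is why long conversations eventually need trimming or summarizing to stay within the model's context window.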

3. Structured Tool Chat Agent

- Works with structured tools and outputs

- Maintains consistent formatting

- Ideal when inputs or outputs must follow a specific format
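A structured tool takes several named, typed arguments instead of a single string, so the model's output has to be parsed and checked before the tool runs. The following sketch illustrates that validation step with a dataclass as the schema. `ConvertArgs`, `parse_tool_call`, and `convert` are hypothetical names, and the unit-conversion tool is invented for the example.

```python
import json
from dataclasses import dataclass, fields

@dataclass
class ConvertArgs:
    """Hypothetical argument schema for a unit-conversion tool."""
    amount: float
    from_unit: str
    to_unit: str

def parse_tool_call(raw_json: str) -> ConvertArgs:
    """Parse model output and reject missing or unexpected fields."""
    payload = json.loads(raw_json)
    expected = {field.name for field in fields(ConvertArgs)}
    if set(payload) != expected:
        raise ValueError(f"expected fields {sorted(expected)}, got {sorted(payload)}")
    return ConvertArgs(
        amount=float(payload["amount"]),
        from_unit=str(payload["from_unit"]),
        to_unit=str(payload["to_unit"]),
    )

def convert(args: ConvertArgs) -> str:
    # Only one conversion is implemented, to keep the sketch short.
    if (args.from_unit, args.to_unit) == ("km", "m"):
        return f"{args.amount * 1000:g} m"
    raise ValueError("unsupported conversion")

call = parse_tool_call('{"amount": 2.5, "from_unit": "km", "to_unit": "m"}')
print(convert(call))
```

Rejecting malformed calls early gives the agent a clear error to react to, rather than a tool failure deep inside the call.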

Integrating Gemini with LangChain

This article uses two Gemini models with LangChain:

- gemini-pro: For text-based tasks

- gemini-pro-vision: For multimodal tasks

# Text-based agent

text_agent = ChatGoogleGenerativeAI(

model="gemini-pro",

temperature=0.7

)

# Vision-capable agent

vision_agent = ChatGoogleGenerativeAI(

model="gemini-pro-vision",

temperature=0.7

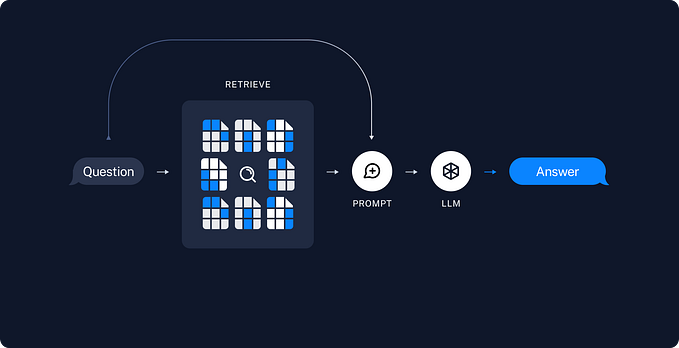

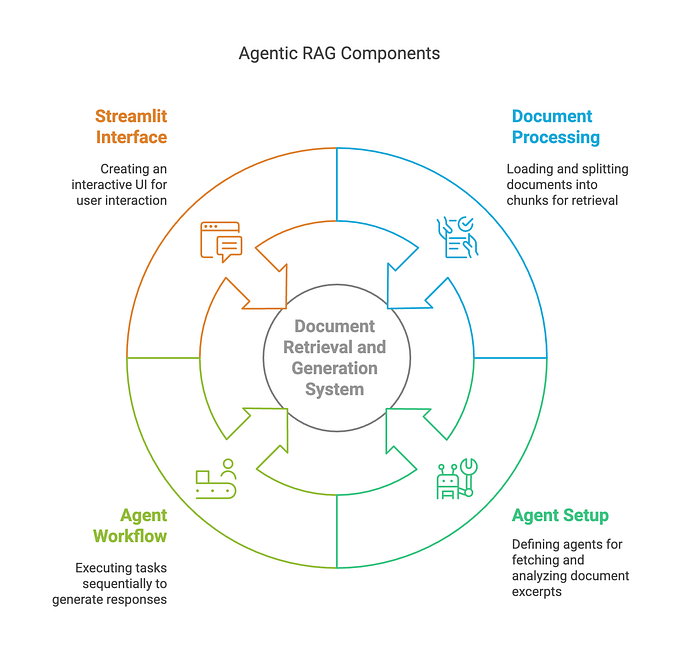

)RAG (Retrieval Augmented Generation) with LangChain

LangChain supports RAG pipelines, which give agents access to external knowledge. A basic pipeline has three stages: text splitting, embeddings, and vector storage.
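To make the stages concrete, here is a toy end-to-end sketch in plain Python. Everything in it is a simplification chosen for illustration: `split_text` is a naive fixed-width splitter, `embed` uses word counts in place of a real embedding model, and `retrieve` scans a list instead of querying a vector store. All function names are hypothetical.

```python
import math
from collections import Counter
from typing import List

def split_text(text: str, chunk_size: int, chunk_overlap: int) -> List[str]:
    """Fixed-width character chunks; consecutive chunks share `chunk_overlap` characters."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap
    return [text[start:start + chunk_size] for start in range(0, len(text), step)]

def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, chunks: List[str], k: int = 1) -> List[str]:
    """Return the k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda chunk: cosine(query_vec, embed(chunk)), reverse=True)
    return ranked[:k]

chunks = split_text("abcdefghij", chunk_size=4, chunk_overlap=2)
print(chunks)

docs = ["gemini is a family of models", "chroma stores vectors", "agents call tools"]
print(retrieve("which tools do agents call", docs))
```

The overlap means text near a chunk boundary appears in two chunks, so a sentence cut by the boundary is still retrievable in one piece from at least one of them.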

Text Splitting

    from langchain.text_splitter import RecursiveCharacterTextSplitter

    text_splitter = RecursiveCharacterTextSplitter(
        chunk_size=1000,
        chunk_overlap=200
    )

Embeddings

    from langchain_google_genai import GoogleGenerativeAIEmbeddings

    embeddings = GoogleGenerativeAIEmbeddings(
        model="models/embedding-001"
    )

Vector Storage

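    from langchain.vectorstores import Chroma

    # `texts` is not defined in this article; it is assumed to be a list of
    # Document objects, for example the output of text_splitter.split_documents(...).
    db = Chroma.from_documents(
        documents=texts,
        embedding=embeddings
    )

Best Practices for Agent Implementation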

Tool Selection

- Load only necessary tools

- Prioritize tools based on task requirements

- Consider computational costs

Error Handling

- Implement robust error catching mechanisms

- Provide fallback options

- Log agent actions for debugging
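These three points can be combined in one small wrapper: retry the primary tool, log each attempt, and fall back if it keeps failing. The sketch below is one possible shape for that, not a LangChain API. `call_with_fallback`, `flaky_search`, and `canned_answer` are hypothetical names.

```python
import logging
from typing import Callable

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("agent")

def call_with_fallback(
    primary: Callable[[str], str],
    fallback: Callable[[str], str],
    query: str,
    max_attempts: int = 2,
) -> str:
    """Try `primary` up to `max_attempts` times, then use `fallback`."""
    for attempt in range(1, max_attempts + 1):
        try:
            result = primary(query)
            logger.info("primary succeeded on attempt %d", attempt)
            return result
        except Exception as exc:  # broad on purpose: tools can fail in many ways
            logger.warning("primary failed on attempt %d: %s", attempt, exc)
    logger.info("using fallback for query %r", query)
    return fallback(query)

def flaky_search(query: str) -> str:
    """Hypothetical tool that always fails, to exercise the fallback path."""
    raise TimeoutError("search backend timed out")

def canned_answer(query: str) -> str:
    return f"Sorry, I could not look up '{query}' right now."

print(call_with_fallback(flaky_search, canned_answer, "LangChain agents"))
```

The log lines record which path produced the answer, which is usually the first thing needed when debugging an agent run.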

Performance Optimization

- Use appropriate temperature settings

- Implement caching when possible

- Monitor token usage
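Caching and usage monitoring can both live in a thin wrapper around the model call. The sketch below shows an exact-match cache with a rough usage counter. `CachedModel` and `stub_model` are hypothetical names, and counting whitespace-separated words is only a crude stand-in for real tokenizer counts. LangChain ships its own caching layer; this example only illustrates the idea.

```python
from typing import Callable, Dict

class CachedModel:
    """Wraps a model function with an exact-match cache and a rough usage counter."""

    def __init__(self, model: Callable[[str], str]) -> None:
        self._model = model
        self._cache: Dict[str, str] = {}
        self.calls = 0
        self.approx_tokens = 0

    def __call__(self, prompt: str) -> str:
        if prompt in self._cache:
            return self._cache[prompt]  # cache hit: no model call, no new tokens
        reply = self._model(prompt)
        self.calls += 1
        # Whitespace word count is only a crude proxy for real tokenizer counts.
        self.approx_tokens += len(prompt.split()) + len(reply.split())
        self._cache[prompt] = reply
        return reply

def stub_model(prompt: str) -> str:
    """Stand-in for an LLM call."""
    return "four words of reply"

model = CachedModel(stub_model)
model("what is an agent")
model("what is an agent")  # served from the cache
print(model.calls, model.approx_tokens)
```

An exact-match cache only helps when prompts repeat verbatim, and it is most useful at low temperature settings where repeated answers are expected to be the same anyway.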

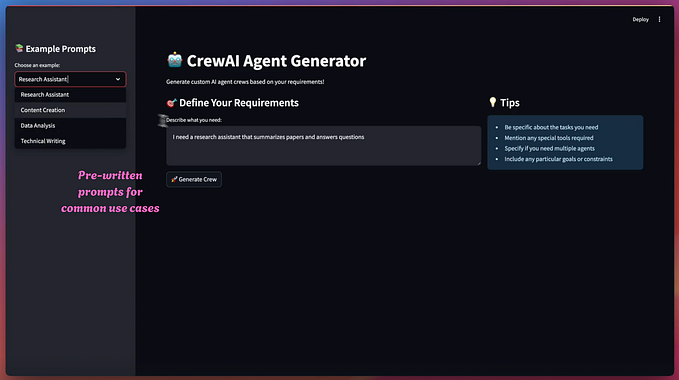

Practical Use Cases

Data Analysis

- Collecting data from various sources

- Performing calculations

- Generating reports

Customer Service

- Understanding user queries

- Providing solutions

- Escalating when necessary

Content Generation

- Research and analysis

- Content creation

- SEO optimization

Advanced Agent Patterns

Chain of Thought

    cot_prompt = PromptTemplate(
        template="Think through this step by step:\n{input}",
        input_variables=["input"]
    )

    cot_chain = LLMChain(llm=llm, prompt=cot_prompt)

Tool Composition

    from langchain.agents import Tool
    from langchain.tools import BaseTool

    class CustomTool(BaseTool):
        # Recent, pydantic-based versions of BaseTool require type annotations here
        name: str = "custom_tool"
        description: str = "Custom tool for specific tasks"

        def _run(self, query: str) -> str:
            # Tool implementation goes here
            raise NotImplementedError("replace with the tool's logic")

Thank you for reading! LangChain Agents, especially when integrated with Google’s Gemini models, provide a powerful framework for building intelligent AI applications. By combining the strengths of LangChain’s agent architecture with Gemini’s advanced language models, developers can create sophisticated AI systems capable of handling complex tasks autonomously.

The integration of RAG systems further enhances these capabilities by allowing agents to access and utilize external knowledge effectively. As the field continues to evolve, the combination of LangChain and Gemini represents a robust solution for building next-generation AI applications.